Amid all the noise about AI in adult learning – loud, constant, and often reactive – there’s a quieter shift we may be overlooking: the subtle erosion of human wisdom in favour of machine certainty.

I recently reread the 2021 Human Augmentation paper published by the UK Ministry of Defence and Germany’s Bundeswehr. The report explores how human capabilities are increasingly entangled with technology, not just in theory, but in policy and practice. What jumped out at me was the author’s call for critical engagement over passive adoption. As AI tools become embedded in learning environments, this warning feels especially urgent for learning and development (L&D) practitioners.

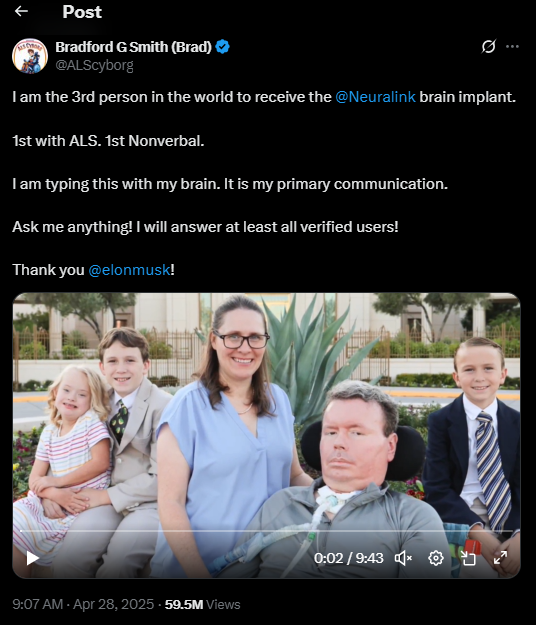

Just four years later, we’re witnessing the realities of human capability and tech unfolding. Neuralink, Elon Musk’s brain-computer interface company, recently completed its first successful human clinical trial implant. Other brain-computer interfaces have been trialled before. But this marks Neuralink’s first human patient: a man with ALS who can now control a computer cursor with his mind. He wrote his post on X typing with his thoughts!

A truly powerful reminder that what once seemed speculative is quickly becoming operational. The question is no longer whether AI and augmentation will change how we live and learn. It’s whether we’ll retain the agency to shape that change, or quietly surrender to it.

It raises a question we can no longer avoid: are we designing systems that support human agency, or slowly replacing it?

The Defence Planning report discussed the accelerating integration of science and technology into human performance, and the need to engage critically rather than accept innovation unthinkingly. It struck me that we’re facing a similar crossroads with Artificial Intelligence (AI) in adult learning and in society more broadly.

The Wizard Behind the Curtain: A Lifelong Lesson

Are you familiar with that moment in The Wizard of Oz when Dorothy pulls back the curtain and discovers the great and powerful wizard is just a man, hiding behind machinery and smoke? It’s a powerful image because it’s not just a twist in the story. It’s a reminder of how easily we can mistake spectacle for truth.

Throughout history, humans have longed for certainty, often placing their trust in forces larger, louder, and more confident than themselves. From ancient rituals of sacrifice to the icons of modern technology, we’ve sometimes been willing to trade agency for the illusion of control, or freedom for the comfort of knowing someone, or something, appears to have the answers.

Much like the wizard behind the curtain, AI can appear all-knowing and authoritative. But the truth is often far more complex, and far less visible. Which is why, alongside all its promise, we face a profound responsibility: to ensure these tools support, rather than substitute, human autonomy and critical thought.

A New Paradigm: From Information Access to Cognitive Partnership

For centuries, technology has served as a tool, extending human memory and expanding access to information. AI, however, is no longer just a tool. It’s becoming a cognitive partner – an unseen hand that predicts needs, shapes choices, and increasingly makes decisions on our behalf.

In education, AI-powered platforms promise personalisation at a scale once unimaginable. They can identify learning gaps, forecast knowledge pathways, and adapt content in real time. In workplace learning, AI is helping organisations build dynamic, on-demand knowledge ecosystems.

But beneath all this promise lies a harder question: are we still holding the reins of our own learning, or quietly handing them over to a ‘wizard behind the curtain’? A system whose workings we barely understand. Even tech leaders like Sam Altman, Sundar Pichai, and Dario Amodei have admitted they don’t fully understand how their own AI models work. A striking reminder that what appears seamless is often far from transparent.

I suppose I’ve always been uneasy with the idea that certainty should be handed to us, especially when it’s wrapped in confidence and spectacle. It’s important to ask: Who’s actually running the show? (Or, if we’re honest, most of the time it’s more like: who’s driving the clown car?)

Today, I see echoes of that same impulse in how we approach AI. As is often the case, especially in EdTech, what we’re presented with isn’t always what it seems.

The All-Knowing Illusion: Grok and the Search for Certainty

Already, on platforms like X (formerly Twitter), users routinely ask Grok or other AI systems to confirm or deny the truth of posts, treating machine outputs as the final word. What was once a marketplace of discussion and critical exchange, whether in agreement or not, risks becoming a single-channel broadcast of machine-certified ‘truths’ from a hallucinating, drunk ‘tech’ uncle, summoned with a simple @Grok in the comments.

AI doesn’t possess knowledge in any human sense. It doesn’t reason, reflect, or understand context. It processes patterns in data, which is remarkable, but inherently limited.

In this behaviour, we glimpse something much older than technology itself. We’re reverting to an ancient human impulse: the longing for an all-knowing, all-seeing authority to which we can offer our uncertainty and fear. Like the citizens of ancient Greece, who sacrificed to capricious gods in hopes of certainty, we now offer our trust, and even our children, to the high priests of technology.

Today’s sacrifices are quieter, but no less profound:

– Devices are placed in toddlers’ hands because tech titans have declared it the future.

– Algorithms now curate what teenagers see, believe, and aspire to.

– Meanwhile, our elders, shaped by decades of passive trust in mainstream media, often believe much of what they encounter online as verbatim truth.

We’re feeding the next generation, and the last, into systems we barely understand, convinced that this is progress because the ‘wizards’ have told us so.

Surrendering the Power to Think: Cognitive Sovereignty at Risk

When we ask AI to tell us what’s true, rather than using it as a tool to help us think, we risk surrendering the very essence of learning itself. AI is not a new god; it’s a mirror, reflecting our collective values, biases, and desires, both enlightened and unconscious.

The deeper risk of AI’s role in learning is not technical; it’s philosophical. By treating AI as an infallible source of knowledge, we risk quietly surrendering our cognitive sovereignty: the right and responsibility to think, judge, and decide for ourselves.

The danger is not that AI will force us into passivity, but that we’ll choose it. As AI becomes more seamless, intuitive, and persuasive, the temptation to accept its outputs uncritically will grow. Education must therefore equip learners not just to use AI, but to question it constantly.

We must pierce the illusion of omniscience and demand transparency, accountability, and humility from the systems we create. I know I might sound a little Frasier Crane there, but I prefer to think I’m aligning more with Daniel Kahneman: we need to be paying attention to the slow, often invisible ways our thinking is shaped, and choosing to remain intentional.

Towards Human-AI Teaming in Learning

The future of learning will not belong to those who adopt AI the fastest. It’s going to belong to those who most effectively integrate the unique strengths of humans and machines, while preserving the distinctly human capacities for critical thought, creativity, and ethical judgement.

Reclaiming Human Strength in an AI World

Our goal must be not to outsource thinking to AI, but to consciously, critically, and collaboratively amplify human learning. As Arianna Huffington reminds us, “To succeed in this new world, we need to be more creative, more curious, and embrace lifelong learning, because machines can’t replicate that (yet).”

Conclusion: Shaping the Future, Not Being Shaped by It

AI and human learning are on the cusp of profound transformation. Like human augmentation, AI offers enormous promise, but only if we take an active role in shaping its purpose and limits.

This might be my quiet rage-against-the-machine moment, driven not by panic but by a deep need to protect what makes learning truly human. We must resist the comforting illusion of the wizard behind the curtain. As elders, we’re almost obliged to teach learners not to worship AI but to question it relentlessly, and to strengthen, not surrender, their agency.

The choice before us is simple yet profound:

The curtain is already moving. What and who will we choose to see?

Will AI be a tool that illuminates new possibilities, or a new deity we obey unthinkingly, surrendering the most human of all powers: the ability to think for ourselves?

It’s not just about what we know, but how we think, relate, and respond: the skills that truly define our humanity and drive real learning.
